#ai-infrastructure

from Fast Company

7 hours ago: Looking back at the 5 biggest AI lessons of 2025

AutoDS was bootstrapped and eventually reached 1.8 million users, generated more than $1 billion in user revenue, and exited successfully to Fiverr. From its earliest days, the company was fast-moving, the kind of place where speed was strategic and rapid implementation felt like the natural way to operate. But as Pozin's team moved from pilots to production, they learned that speed alone was not enough. AI only delivers results when the right data foundations and ownership structures are in place.

Artificial intelligence

from 24/7 Wall St.

13 hours ago: SanDisk and Western Digital are the Real AI Kings of 2026

As investors have turned their backs on software (notably, the seat-based software-as-a-service companies), they've turned toward hardware in a big way. You wouldn't know it by looking at the flat shares of Nvidia (NASDAQ:NVDA), but the iShares Semiconductor ETF (NASDAQ:SOXX) is up around 13% year to date, with few signs of slowing down. The winners within semis have been broad, but the undisputed kings of the 2026 semiconductor surge are the memory and storage stocks.

Business

from Fortune

13 hours ago: 'Space-based AI is obviously the only way to scale': Elon Musk hatches grand plan as he merges SpaceX and xAI

It's a goal that Musk suggested in his announcement of the deal could become easier to reach with a combined company. "In the long term, space-based AI is obviously the only way to scale," Musk wrote on SpaceX's website Monday, then added in reference to solar power, "It's always sunny in space!" Musk said in his announcement he estimates "that within 2 to 3 years, the lowest cost way to generate AI compute will be in space."

Tech industry

Artificial intelligence

from Ars Technica

1 day ago: SpaceX acquires xAI, plans to launch a massive satellite constellation to power it

SpaceX acquired xAI to vertically integrate AI and space capabilities, deploying orbital data centers to scale advanced AI while facing significant controversies and risks.

Marketing tech

from Exchangewire

1 day ago: Aceex's Emin Alpan on Ad Tech Challenges, Maintaining Stable Performance, and Tougher Privacy Regulations

Privacy regulation, signal loss, platform changes, intensified competition, and required AI/infrastructure investments undermine ad tech performance and demand continuous adaptation.

from TechCrunch

2 days ago: India offers zero taxes through 2047 to lure global AI workloads

As the global race to build AI infrastructure accelerates, India has offered foreign cloud providers zero taxes through 2047 on services sold outside the country if they run those workloads from Indian data centers - a bid to attract the next wave of AI computing investment, even as power shortages and water stress threaten expansion in the South Asian nation.

Tech industry

from TechCrunch

5 days ago: Elon Musk's SpaceX and xAI in talks to merge, report says

SpaceX and xAI, both companies led by Elon Musk, could merge ahead of a planned SpaceX IPO this year, according to a report from Reuters. This would bring products like the Grok chatbot, X platform, Starlink satellites, and SpaceX rockets together under one corporation. Company representatives have not discussed this possibility in public. However, recent filings show that two new corporate entities were established in Nevada on January 21, which are called K2 Merger Sub Inc. and K2 Merger Sub 2 LLC.

Tech industry

Artificial intelligence

from The Motley Fool

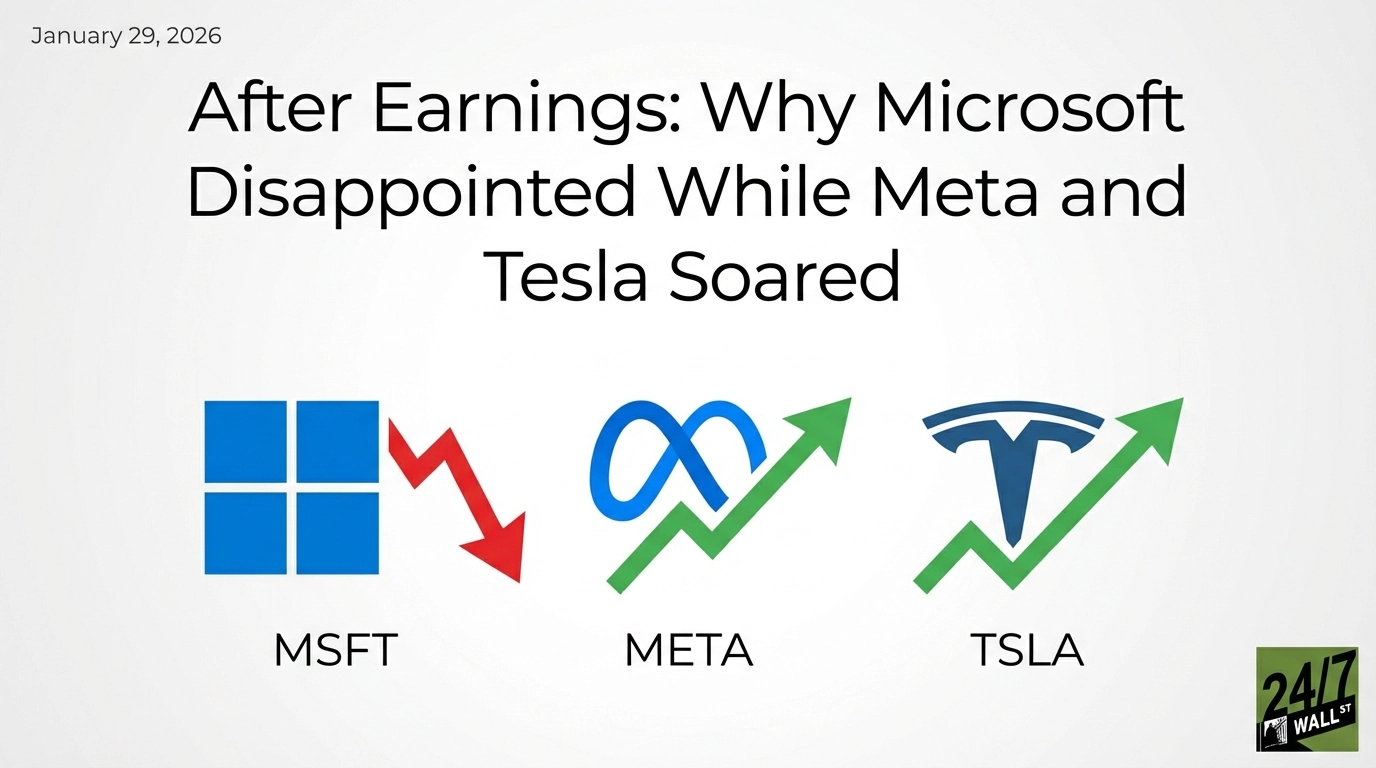

6 days ago: Meta Platforms Stock Investors Just Got Fantastic News from CEO Mark Zuckerberg

Meta's AI-driven ad tech and expanding user base drove strong Q4 financials, exceeded expectations, and prompted massive 2026 capex plans for AI infrastructure.

from 24/7 Wall St.

6 days ago: Salesforce Deepens Defense and Wildfire AI Roles With Long-Horizon Deals

The company announced a 10-year, $5.6 billion defense contract with the U.S. Army and joined a $100 million wildfire prevention venture called EMBERPOINT LLC. The Army contract runs through Salesforce's Missionforce National Security subsidiary, accelerating AI-powered decision-making across millions of military personnel. At $560 million annually, this represents 1.4% of Salesforce's $40.3 billion revenue base. Not transformational, but meaningful for a company trading at 18x forward earnings with analysts targeting 33% upside to $329.65.

Business

Artificial intelligence

from Fortune

1 week ago: OpenAI CFO Sarah Friar: There's a 'mismatch' between AI's abilities and the value companies are capturing

AI is being treated as core economic infrastructure, but most organizations barely capture its capabilities, creating a significant capability overhang.

from Fortune

1 week ago: Trump's own Big Beautiful Bill could add $5.5 trillion to the deficit and sabotage his plan to 'grow out' of the national debt crisis

"The big thing is growth," responded the POTUS. "Growth is the way we go from high debt to low debt. We're going to be growing our way out, and I think we're going to be paying down debt." Trump has frequently stated that his manifesto championing sweeping deregulation and domestic manufacturing, alongside the rapid rise of AI (Trump trumpets that he personally orchestrated the technology's single biggest initiative, the $500 billion, multi-partner Stargate data center project), will unleash a historic productivity revolution.

US politics

Artificial intelligence

from Medium

1 week ago: Energy Costs Will Decide Which Countries Win the AI Race, Microsoft's Nadella Says

Energy costs will determine national competitiveness in AI; access to low-cost reliable energy and cheap computation tokens drives economic growth and infrastructure investment.

from Techzine Global

1 week ago: DAWN supercomputer gets upgrade and swaps Intel for AMD

The British government is investing heavily in national computing infrastructure. According to Neowin, the DAWN supercomputer at the University of Cambridge is being expanded with an additional investment of approximately $49 million. The expansion will increase the system's total computing power by a factor of six. The aim is to enable researchers and technology companies to compete more effectively with players from the United States and China.

UK politics

from Fortune

1 week ago: CEOs are bullish but nervous: David Solomon's Davos readout on deregulation and 'shotgun' policy

Speaking on the Goldman Sachs Exchanges podcast on Jan. 20, ahead of his trip to Davos, Solomon described a business landscape defined by a sharp dichotomy. On one side, the macroeconomic setup for 2026 is "pretty good for risk assets and for markets," fueled by a "confluence of very stimulative actions," including monetary easing and a massive capital investment boom in AI infrastructure. On the other, executives are grappling with anxiety about inconsistent policymaking and geopolitical "noise."

Business

from Business Insider

1 week ago: OpenAI is making more than $1 billion a month from something that has nothing to do with ChatGPT

OpenAI has pulled in a billion-dollar month from something other than ChatGPT. Sam Altman said in a post on X on Thursday that OpenAI added more than $1 billion in annual recurring revenue in the past month "just from our API business." "People think of us mostly as ChatGPT, but the API team is doing amazing work!" the OpenAI CEO wrote.

Artificial intelligence

from Fortune

1 week ago: Elon Musk warns the U.S. could soon be producing more chips than we can turn on. And China doesn't have the same issue

Electrical power shortages and grid limitations are the primary constraint on U.S. AI deployment despite rapidly increasing chip production.

from ComputerWeekly.com

1 week ago: Davos 2026: Smart thinking needed for sovereign AI investment

Policy-makers are being urged to focus on investments in artificial intelligence (AI) in a way that makes sense for the economy. The Rethinking AI sovereignty paper, published to coincide with the World Economic Forum (WEF) meeting in Davos, recommends that policy-makers reframe AI sovereignty as strategic interdependence, where localised investments are combined with trusted partnerships and alliances. The paper, co-authored by the World Economic Forum and Bain & Co, presents data that illustrates the gap between AI infrastructure investment in the US and China compared with other countries.

Artificial intelligence

from www.engadget.com

1 week ago: Elon Musk is reportedly trying to take SpaceX public

Elon Musk is reportedly looking to finally take SpaceX public after years of resistance, according to sources who spoke to The Wall Street Journal. The company has long said it wouldn't choose an IPO until it had established a presence on Mars. That isn't happening anytime soon. So why now? Company insiders have suggested it's because Musk wants to build AI data centers in space. Google recently announced it was looking into putting a data center in space, with test launches scheduled for 2027.

Artificial intelligence

from 24/7 Wall St.

1 week ago: 3 International Growth Stocks That Can Compete With U.S. Technology

Silicon Motion Technology (NASDAQ:SIMO) is a Hong Kong semiconductor firm that specializes in NAND flash controllers. These memory storage solutions are critical for the AI buildout, and investors have started to pick up on it. The company's stock has more than doubled over the past year and is up by more than 20% to start the year. Revenue increased by 14% year-over-year in Q3 2025, and most of the growth was driven by AI infrastructure.

E-Commerce

from 24/7 Wall St.

1 week ago: 3 Stocks That Could Double In 2026

Micron Technology (NASDAQ:MU) could be the biggest beneficiary of the next bull market. It ended the year with a strong financial profile and growing demand for its solutions. Changing hands at $365, the stock has gained 233% in the past year. Demand for its NAND, DRAM, and high-bandwidth memory has outpaced supply and expanded margins. In its recently announced results, the company reported $13.64 billion in revenue, beating estimates. That was an impressive 57% year-over-year jump, driven by growing demand for its specialized memory chips.

Business

from Business Insider

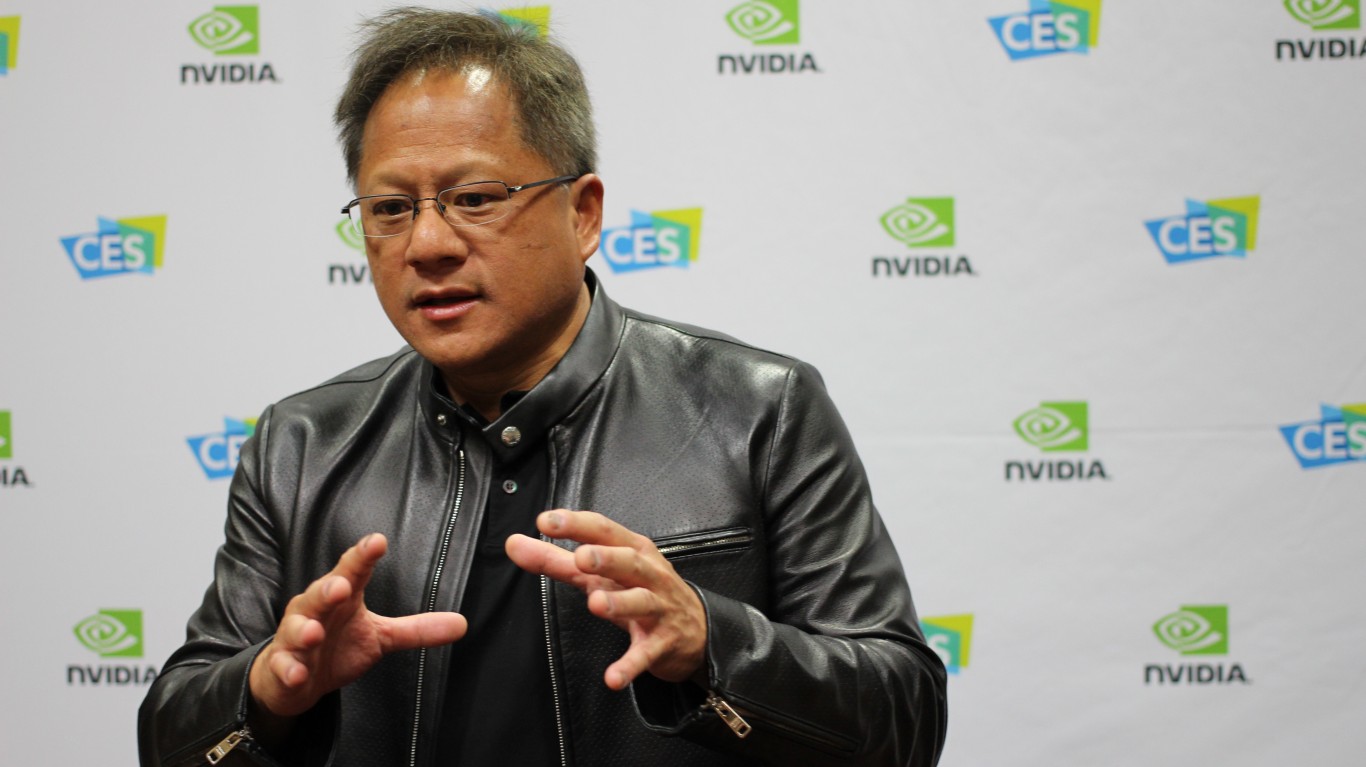

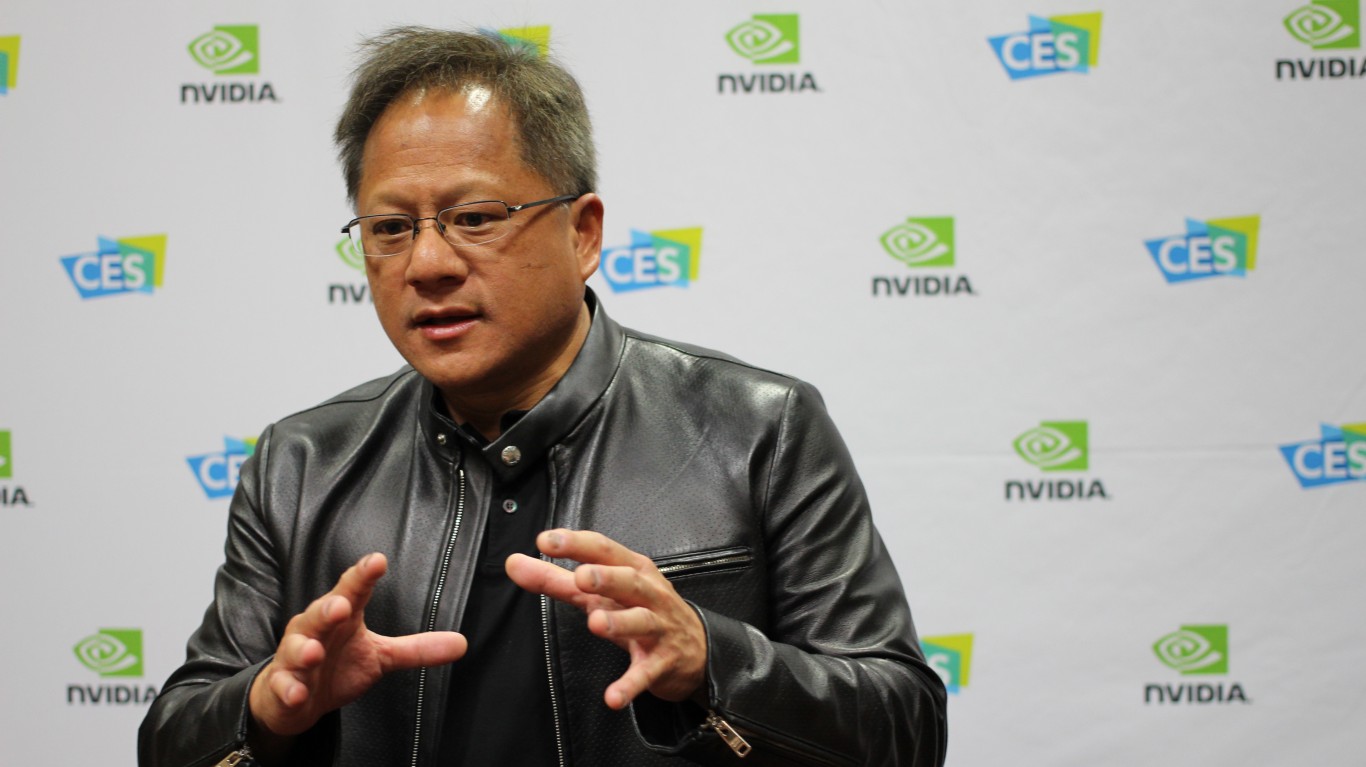

1 week ago: Nvidia's Jensen Huang says it's a good time to be a plumber - and not just because it's an AI-proof job

Is AI coming for your job? If you work in construction or plumbing, that's perhaps not a question you need to worry about. Speaking at the World Economic Forum on Wednesday, Nvidia CEO Jensen Huang said it was a great time to be a tradesperson because the AI boom is creating demand for manual labor to build data centers. "It's wonderful that the jobs are related to tradecraft and we're going to have plumbers and electricians and construction and steelworkers," he said in a conversation with BlackRock CEO Larry Fink in Davos, Switzerland.

Artificial intelligence
