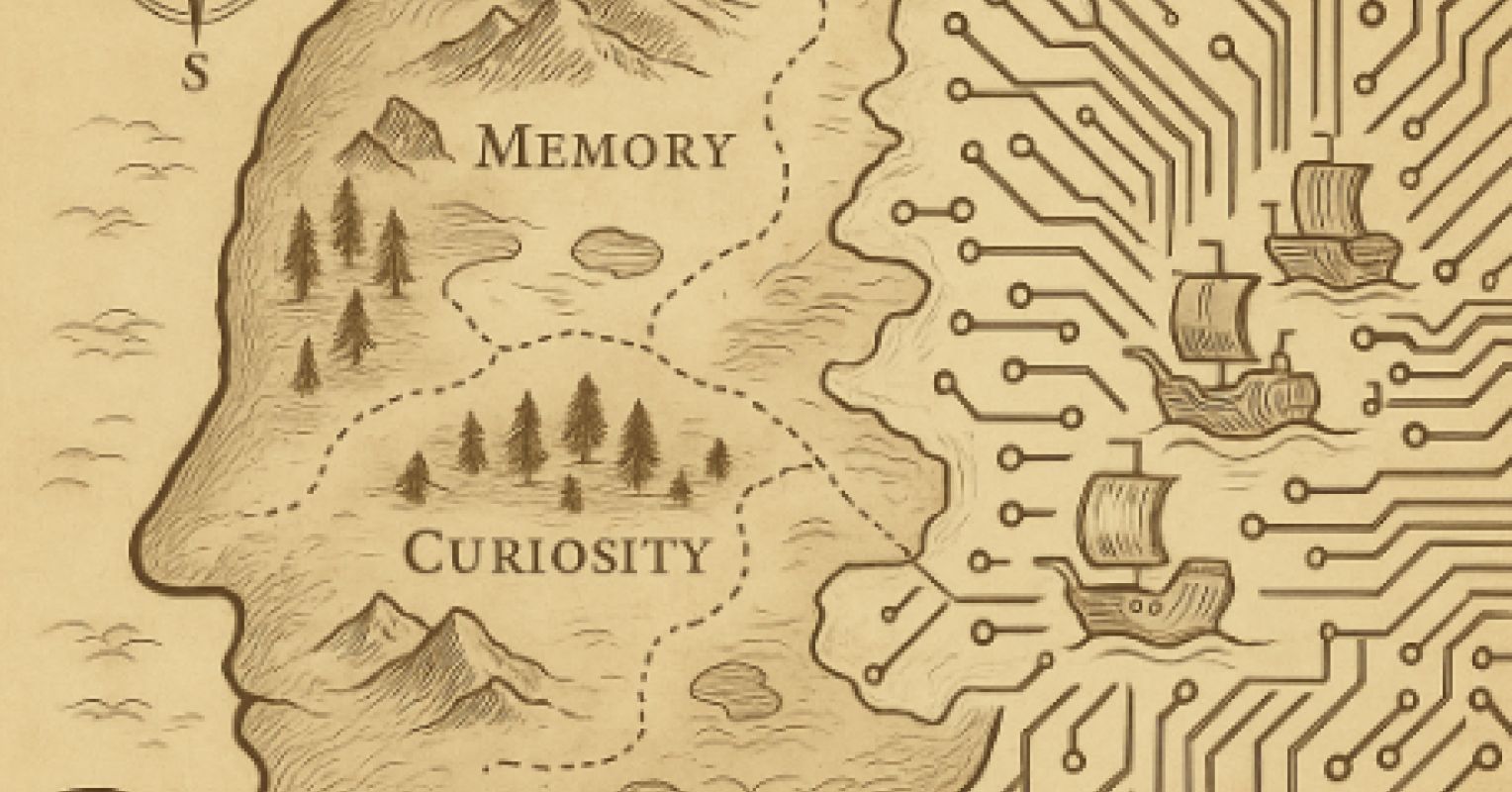

"When a system feels so smooth and endlessly accommodating, it naturally pulls our thinking into its orbit. The liberation offered by large language models has its cost."

"Colonization never starts as a grand conquest. It often begins quietly, perhaps even politely. First a gift and then a promise that become better ways for easier living."

"What once felt like a tangled forest of thought becomes more like a well-kept garden that is orderly and efficient, but missing the wildflowers."

"They don't need malice to colonize. They just exist in a way that's irresistible, meeting us with answers before we've fully formed the questions."

Large language models (LLMs) integrate seamlessly into daily life, offering convenience and clarity. At first they bring joy and sharper thinking, but over time their presence changes how people think. As complex, messy thought gives way to streamlined efficiency, unexpected and diverse ideas can be lost. The shift often goes unnoticed, and it requires no intent or malice on the part of LLMs. Their fluency is irresistible: users come to rely on them for answers before fully articulating their questions, which can displace older ways of thinking.

#large-language-models #cognitive-impact #technology-colonization #thought-displacement #efficiency-vs-richness

Read at Psychology Today
