"Large Language Models (LLMs) exhibit fluency in generating language but ultimately lack genuine understanding, rendering them as advanced, yet hollow replicas of human cognition."

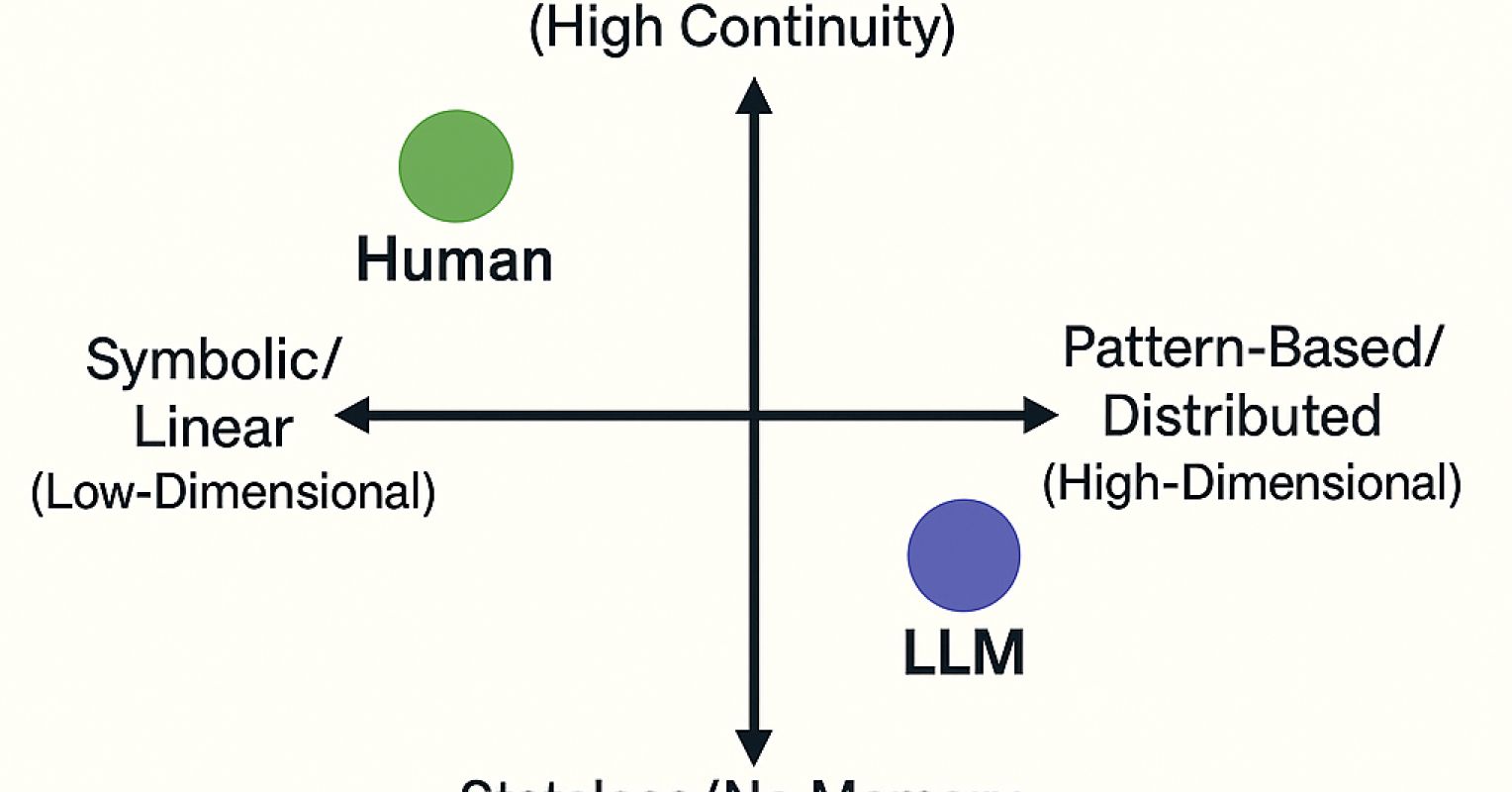

"The four-quadrant cognitive map is designed to explore both pre-intelligence and Artificial General Intelligence (AGI), emphasizing that we need to expand our conceptual framework beyond merely scaling existing models."

"True AGI may necessitate a combination of hybrid reasoning and memory systems, but this progression raises significant concerns about the development of intelligences that could surpass human control."

"The distinction between human and AI cognition surfaces a critical dialogue about the meaning of thinking, intelligence, and the nature of our own cognitive limitations in a landscape enriched by technological advancements."

Large Language Models (LLMs) imitate human thought through fluent language generation but fundamentally lack depth of meaning. This creates a paradox: LLMs are described as 'anti-intelligence' because they cannot replicate genuine cognition. A four-quadrant cognitive map illustrates the differences between human and AI reasoning. The map also raises questions about other forms of cognition, particularly whether Artificial General Intelligence (AGI) could exist and whether it would require hybrid reasoning and memory. The pursuit of AGI, however, carries the risk of producing forms of intelligence that escape human control.

Read at Psychology Today

